Article feedback/Public Policy Pilot/Design Phase 2

This document is out of date and no longer being maintained.

This page describes the design for phase 2 of the Article Feedback Tool pilot. It is a companion to the phase 1 design document and describes only the changes made for phase 2. It assumes that the reader is familiar with the phase 1 design.

Scope

For phase 2 of the Article Feedback pilot, we want to address the following issues:

- "Expired" ratings. If we do not define the formula used, we should at least develop a system whereby that formula can be applied. This is both a feature modification and a behavior change.

- Clearing ratings. The ability for users to "clear" a rating of any values they had previously applied. This is a feature modification.

- Modified Survey. Different questions, perhaps more clearly targeted.

- Post-rating "calls to action". We want to see if the simple act of rating an article can easily serve as a gateway towards editing or creating an account. This is a feature modification, a behavior change, and a data tracking experiment.

- Post-rating Survey Push. We want to be more aggressive about asking people to take our survey. This is a behavior change.

- Expert self-identification. We want to see if people self-identify as being knowledgeable in the topic, and if so, what their rating patterns are.

Stretch Goals

- Performance increase. It would be good to know that the tool will not collapse after a certain threshold.

- Histograms. Having better visualizations would be good.

Positional A/B/C Testing

The tool will be tested across multiple positions on the page in order to help identify the optimal location for user participation. Three positions have been chosen, each with slight behavior changes:

- A - The tool is located at the bottom of the page. It is already visible; there is no need to activate it.

- B - When activated, the tool opens as a modal dialog over the page. The trigger for activation is a text link in the page sidebar.

- C - When activated, the tool opens as a modal dialog over the page. The trigger for activation is located on the right side of the page, below the search box (in the same general area as protection icons appear).

  - This version will need tweaking to handle various cases such as pages that are placed under Pending Changes control.

Calls-to-Action

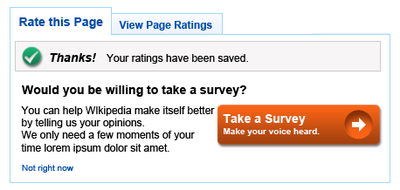

Upon submission of the ratings, the tool will return with a success message and one of three possible calls-to-action. The various calls will be based around the user's state (for instance, we should not suggest that a user create an account if they are logged in).

The fallback call - the most common one - will be an exhortation to edit the page in question.

The actual language used in the calls is to be determined.

The calls are:

- Survey - this call asks the user to take a short survey about the Article Feedback Tool and what they think. This call will only appear if the user has not taken the survey.

- Create Account - This call suggests that the user create a user account. This call will only appear if the user is anonymous.

  - Note: it is not possible to transfer ratings given as an anonymous user to a new user account. This may confuse users who go on a rating spree and then create an account.

- Edit the Article - This call suggests that the user make edits to the article. This is the fallback call-to-action.

  - Ideally, we will be able to detect what kind of ratings the user submitted and tailor the call to those ratings. For example, if the user indicates that the article is poorly sourced, the language may suggest that they find and add reputable sources.

Additional calls-to-action can be engineered, such as "joining the conversation" and so on.
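The selection rules above can be sketched roughly as follows. This is only an illustration: the names `CallToAction` and `choose_call_to_action` are hypothetical, and the order in which the survey and account-creation calls are checked is an assumption, since the design does not state which takes priority.

```python
from enum import Enum


class CallToAction(Enum):
    SURVEY = "survey"
    CREATE_ACCOUNT = "create_account"
    EDIT_ARTICLE = "edit_article"


def choose_call_to_action(is_anonymous: bool, has_taken_survey: bool) -> CallToAction:
    """Pick one call-to-action from the user's state.

    Assumption: the survey is checked before account creation. The design
    does not specify an order between these two calls.
    """
    if not has_taken_survey:
        # The survey call only appears if the user has not taken the survey.
        return CallToAction.SURVEY
    if is_anonymous:
        # Never suggest account creation to a logged-in user.
        return CallToAction.CREATE_ACCOUNT
    # Fallback call: suggest editing the article.
    return CallToAction.EDIT_ARTICLE
```

A logged-in user who has already taken the survey would get `CallToAction.EDIT_ARTICLE` under this sketch.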

Call-to-Action Design Elements

The system is designed to easily allow new calls-to-action to be loaded and used. Each individual call consists of multiple parameters. The success/confirmation messages are parameters to the screen so that we can test language style. The parameters are:

- Confirm Title - A single word headline, such as "Thanks" or "Success!"

- Confirm Text - One sentence indicating that the action succeeded.

- Pitch Title - A headline.

- Pitch Text - This is a short block of text that describes what the call-to-action is about.

- Pitch Icon (optional) - This is a graphic designed to help attract attention to the call-to-action.

- Accept Button Text - This will fire off the process within the specific call-to-action (e.g., load the survey, or start the account creation wizard)

- Reject Button Text - This will dismiss the call-to-action and return the tool to its "rated" state.
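One way to picture the parameter set above is as a small record type. The sketch below is illustrative only: the class name, field names, and all message strings are placeholders invented here (the actual language is still to be determined), and nothing about how the extension stores these values is implied.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CallToActionConfig:
    """Hypothetical container for the parameters of one call-to-action."""

    confirm_title: str        # single-word headline, e.g. "Thanks"
    confirm_text: str         # one sentence saying the action succeeded
    pitch_title: str          # headline for the call itself
    pitch_text: str           # short description of the call
    accept_button_text: str   # starts the call's process
    reject_button_text: str   # dismisses the call, back to the "rated" state
    pitch_icon: Optional[str] = None  # optional attention-getting graphic


# Placeholder wording only; not the final language.
survey_call = CallToActionConfig(
    confirm_title="Thanks",
    confirm_text="Your ratings have been saved.",
    pitch_title="Take a short survey",
    pitch_text="Tell us what you think about the Article Feedback Tool.",
    accept_button_text="Take the survey",
    reject_button_text="Not now",
)
```

Keeping the confirmation messages in the same record as the pitch makes it straightforward to test different language styles per call.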

Survey Inclusion

If the user is given the survey call-to-action and chooses to take the survey, the survey itself should load in the main tool window.

Expert Self-Assessment

The expert self-assessment system begins with a simple checkbox asking if the user has knowledge in the topic. If the user indicates that they do, additional checkboxes and selection options will become visible. The user may then select the knowledge type which best suits their degree of self-assessment.

Back-End Requirements

A/B/C Test Support

One primary intent for phase 2 is the inclusion of a series of A/B tests based on the display and the display's position. To support this, the following changes must happen:

- One or more columns (as needed) must be added to the database tables. This column will be used to store which "style" of the tool the user was given and rated. The style will be sent to the server through the API.

  - This could probably be an integer. There are four defined "styles" for the tool:

    - 0, the "phase one" style (all old entries will be given this value)

    - 1, phase 2 style, page bottom location

    - 2, phase 2 style, sidebar activation

    - 3, phase 2 style, top of screen activation

- A mechanism to store a cookie on the client that indicates which style the user has been bucketed into.

- A mechanism to generate the style selected for the user.

While it would be ideal to store style values for logged-in users on the server (thus ensuring that they have the same experience during the testing phase, regardless of their client), the development timeframe will prevent this.

Determining Applied Style

When a user visits a page that should display the tool, the following happens:

- The server checks for the value of the article feedback "style" cookie. If the cookie is present and the value of it is valid, the system produces that style of display.

- If the value is missing or invalid (a value of 0 or higher than 3), a new style value will be generated and placed on the client.

It should be possible to override the value of the style cookie via a URL parameter to enable testing.
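The bucketing logic described in this section might look something like the sketch below. It is not the extension's implementation: the function names are hypothetical, the uniform random assignment is an assumption (the design only says a new value "will be generated"), and cookie and URL handling are reduced to plain string arguments.

```python
import random
from typing import Optional, Tuple

# 0 is the phase one style and is never assigned in phase 2.
VALID_STYLES = (1, 2, 3)


def parse_style(raw: Optional[str]) -> Optional[int]:
    """Return the style as an int if raw holds a valid phase 2 style, else None."""
    if raw is None:
        return None
    try:
        value = int(raw)
    except ValueError:
        return None
    return value if value in VALID_STYLES else None


def resolve_style(
    cookie_value: Optional[str],
    url_override: Optional[str] = None,
    rng=random,
) -> Tuple[int, bool]:
    """Return (style, needs_new_cookie) for the current page view.

    Assumption: new styles are drawn uniformly at random from VALID_STYLES.
    """
    override = parse_style(url_override)
    if override is not None:
        # Testing override: use it without touching the stored cookie.
        return override, False
    stored = parse_style(cookie_value)
    if stored is not None:
        return stored, False
    # Missing or invalid cookie: bucket the user and ask the caller to set it.
    return rng.choice(VALID_STYLES), True
```

For example, `resolve_style("2")` keeps the user in style 2, while `resolve_style("0")` or `resolve_style(None)` assigns a fresh style and signals that the cookie should be written.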

Expired Calculation

A user's ratings are considered "expired" when:

- The article has reached 30 revisions, or

- The article's size has changed by 35% in either direction, or

- The article has reached 15 revisions and its size has changed by 20% in either direction

Ideally, these state calculations could be defined within the server's LocalSettings configuration file so that they can be changed without redeploying the extension. Even just making the numeric thresholds configurable there would be useful.
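As a rough illustration of the three expiry rules with configurable thresholds, consider the sketch below. Several points are assumptions rather than part of the design: revisions and size change are measured from the moment the rating was submitted, each threshold is treated as "at least", and the names `ExpiryThresholds` and `is_expired` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExpiryThresholds:
    """Hypothetical settings that could be supplied from site configuration."""

    max_revisions: int = 30
    max_size_change: float = 0.35
    combined_revisions: int = 15
    combined_size_change: float = 0.20


def is_expired(
    revisions_since_rating: int,
    size_at_rating: int,
    current_size: int,
    thresholds: ExpiryThresholds = ExpiryThresholds(),
) -> bool:
    """Apply the three expiry rules; any single rule expires the rating."""
    if size_at_rating > 0:
        # Relative change in either direction (growth or shrinkage).
        size_change = abs(current_size - size_at_rating) / size_at_rating
    else:
        # Assumption: an article that was empty counts as fully changed
        # as soon as it has any content.
        size_change = 0.0 if current_size == 0 else float("inf")

    return (
        revisions_since_rating >= thresholds.max_revisions
        or size_change >= thresholds.max_size_change
        or (
            revisions_since_rating >= thresholds.combined_revisions
            and size_change >= thresholds.combined_size_change
        )
    )
```

Passing a different `ExpiryThresholds` instance changes the numbers without touching the rule logic, which is the property the configuration note above asks for.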

Tool Design

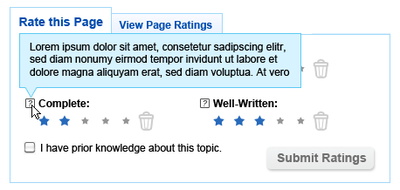

General Changes from Phase One

- Metric labels are now located above the star bars themselves.

  - This helps to solve two issues: Extra-long labels (e.g., from translations) and right-to-left language layouts.

- The "help" icon is now located to the right of the metric label.

- Empty (grayed) stars are now smaller in size than selected stars.

- The labels have been changed to better account for what readers expect. The previous labels were editor-centric. The new labels are "Trustworthy," "Unbiased," "Complete," and "Well-Written".

Stale and Expired Ratings

In the phase one design, as a user's ratings became "stale", the color of the stars changed. With the addition of "expired" ratings in phase 2, the concept of "stale" ratings has become less important (and perhaps overcomplicated).

Accordingly, there are no indicators of staleness. The user is notified when their ratings have expired through a screen message. Star color does not change.

The expired message includes a link to a page that explains how and why the ratings expire. This page should also have an exhortation to help or join the workgroup for the tool.

Submission Button

During UX interviews, it came to light that users faced some confusion as to whether or not they could submit just one rating or if they had to submit all four. While the design of phase one started with a disabled "submit" button that then became active after the user clicked a single star bar, the visual change of the button was apparently not enough to indicate that submission could continue.

Additionally, some users expressed confusion as to whether or not they even needed to click "submit" (possibly due to other rating systems having immediate submission).

To combat this, the submit button will have a much more pronounced visual difference between its active and disabled states.

The text for the "submit" button shall change depending on whether or not the user has submitted ratings before.

- If the user has never rated the article, the text shall say "Submit Ratings"

- If the user has rated the article before, the text shall say "Update Ratings"
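The button behavior described in this section can be summarized in a small sketch. The function name and the idea of tracking a set of touched metrics are assumptions made here for illustration; only the two label strings and the "active after one star bar is clicked" rule come from the design.

```python
from typing import Collection, Tuple


def submit_button_state(
    touched_metrics: Collection[str], has_rated_before: bool
) -> Tuple[bool, str]:
    """Return (enabled, label) for the submit button.

    touched_metrics holds the rating categories the user has changed in
    this session; a single one is enough to allow submission.
    """
    enabled = len(touched_metrics) > 0
    label = "Update Ratings" if has_rated_before else "Submit Ratings"
    return enabled, label
```

For instance, a first-time rater who has clicked only one star bar gets an enabled button labeled "Submit Ratings".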

Clear Ratings

The tool includes a "clear rating" control for each rating value. This allows the user to return to a "null" state for the rating (there are no zero values used in the calculations).

By default the control appears only as a hint; when the user rolls over the rating section, it becomes a full control.

Clearing a rating will act as if the user had never provided a value in the first place. Internally, the "new" value will be stored as a "0" and will be specifically ignored in calculations and aggregations of the data.

Clearing a rating is not the same thing as indicating "zero stars".
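To make the distinction concrete, here is a minimal sketch of an aggregation that skips cleared ratings. The function name and the choice of a plain mean are assumptions for illustration; the design only requires that stored "0" values be ignored.

```python
from typing import Iterable, Optional

# Internal marker for a cleared rating. It is not a "zero stars" vote.
CLEARED = 0


def average_rating(values: Iterable[int]) -> Optional[float]:
    """Mean of the submitted ratings, ignoring cleared (0) entries.

    Returns None when no real ratings remain, so that "no data" is not
    confused with a low score.
    """
    counted = [value for value in values if value != CLEARED]
    if not counted:
        return None
    return sum(counted) / len(counted)
```

With this approach, `average_rating([5, 0, 3])` averages only the 5 and the 3, exactly as if the cleared rating had never been given.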